Building your IT landscape on Akamai Connected Cloud – Part 3 – Writing down requirements

Part 1 – Intro

Part 2 – Defining the project

Part 3 – this post

Welcome to the third post of the “Building your IT landscape on Akamai Connected Cloud” series.

In this post we will start writing and breaking down our functional requirements (FRs) and non-functional requirements (NFRs).

What are those, you may ask?

Non-Functional requirement (NFR)

In systems engineering and requirements engineering, a non-functional requirement (NFR) is a requirement that specifies criteria that can be used to judge the operation of a system, rather than specific behaviors. They are contrasted with functional requirements that define specific behavior or functions. The plan for implementing functional requirements is detailed in the system design. The plan for implementing non-functional requirements is detailed in the system architecture, because they are usually architecturally significant requirements.[1]

Broadly, functional requirements define what a system is supposed to do and non-functional requirements define how a system is supposed to be. Functional requirements are usually in the form of “system shall do <requirement>”: an individual action or part of the system, perhaps explicitly in the sense of a mathematical function, a black-box description, or an input-process-output (IPO) model. In contrast, non-functional requirements are in the form of “system shall be <requirement>”: an overall property of the system as a whole or of a particular aspect, and not a specific function. The system’s overall properties commonly mark the difference between whether the development project has succeeded or failed.

Non-functional requirements are often called the “quality attributes” of a system. Other terms for non-functional requirements are “qualities”, “quality goals”, “quality of service requirements”, “constraints”, “non-behavioral requirements”,[2] or “technical requirements”.[3] Informally these are sometimes called the “ilities”, from attributes like stability and portability. Qualities (that is, non-functional requirements) can be divided into two main categories:

- Execution qualities, such as safety, security and usability, which are observable during operation (at run time).

- Evolution qualities, such as testability, maintainability, extensibility and scalability, which are embodied in the static structure of the system.[4][5]

It is important to specify non-functional requirements in a specific and measurable way.[6][7]
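To make "specific and measurable" concrete, here is a minimal sketch of how a requirement could be written down as a metric, a comparison, and a threshold. The `Requirement` class and its field names are hypothetical, made up for this post, and the 100 ms figure simply borrows the response-time target from our list further down.

```python
from dataclasses import dataclass
import operator

# Hypothetical structure for illustration only: a requirement counts as
# "measurable" here when it names a metric, a comparison, and a threshold.
_COMPARATORS = {
    "<": operator.lt,
    "<=": operator.le,
    ">": operator.gt,
    ">=": operator.ge,
}


@dataclass(frozen=True)
class Requirement:
    name: str
    metric: str
    comparator: str  # one of the keys in _COMPARATORS
    threshold: float
    unit: str

    def is_met(self, measured: float) -> bool:
        """Return True when the measured value satisfies the threshold."""
        return _COMPARATORS[self.comparator](measured, self.threshold)

    def describe(self) -> str:
        """Render the requirement as a single checkable statement."""
        return f"{self.name}: {self.metric} {self.comparator} {self.threshold} {self.unit}"


response_time = Requirement("Performance", "average response time", "<", 100.0, "ms")
```

Calling `response_time.is_met(80.0)` returns `True`, while a measured value of exactly 100 ms fails the strict "under 100 ms" wording. Writing requirements this way forces us to decide on the unit and the comparison up front.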

Functional requirements

In software engineering and systems engineering, a functional requirement defines a function of a system or its component, where a function is described as a specification of behavior between inputs and outputs.[1]

Functional requirements may involve calculations, technical details, data manipulation and processing, and other specific functionality that define what a system is supposed to accomplish.[2] Behavioral requirements describe all the cases where the system uses the functional requirements; these are captured in use cases. Functional requirements are supported by non-functional requirements (also known as “quality requirements”), which impose constraints on the design or implementation (such as performance requirements, security, or reliability). Generally, functional requirements are expressed in the form “system must do <requirement>,” while non-functional requirements take the form “system shall be <requirement>.”[3] The plan for implementing functional requirements is detailed in the system design, whereas non-functional requirements are detailed in the system architecture.[4][5]

As defined in requirements engineering, functional requirements specify particular results of a system. This should be contrasted with non-functional requirements, which specify overall characteristics such as cost and reliability. Functional requirements drive the application architecture of a system, while non-functional requirements drive the technical architecture of a system.[4]

Non-Functional requirements

Scalability: The architecture must support horizontal scaling by dynamically adding or removing servers based on demand. It should utilize auto-scaling groups and container orchestration platforms to efficiently manage resource allocation.

High Availability: The system must achieve a minimum uptime of 99.999%, employing fault-tolerant design patterns such as redundant server clusters, load balancers, and automatic failover mechanisms. It should utilize multi-region deployment to ensure high availability across geographically distributed data centers.
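It helps to translate an uptime percentage into an actual downtime budget. The short sketch below does that arithmetic; the function name is our own, and it assumes downtime is measured over a plain calendar period with no excluded maintenance windows.

```python
def downtime_budget_seconds(availability_percent: float, period_days: float = 365.0) -> float:
    """Seconds of allowed downtime in the period for a given availability target."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    period_seconds = period_days * 24 * 60 * 60
    return period_seconds * (1.0 - availability_percent / 100.0)


yearly = downtime_budget_seconds(99.999)
monthly = downtime_budget_seconds(99.999, period_days=30)
print(f"per year: {yearly / 60:.2f} minutes")
print(f"per 30 days: {monthly:.1f} seconds")
```

For 99.999% this works out to roughly 5.26 minutes per year, or about 26 seconds in a 30-day month, which is why "five nines" pushes us toward automatic failover rather than manual recovery.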

Performance: The system should maintain a maximum network latency of 50 milliseconds and aim for an average response time of under 100 milliseconds for all critical operations. It should optimize database queries, utilize in-memory caching, and employ content delivery networks (CDNs) for efficient content distribution.

Security: The architecture must enforce end-to-end encryption for data transmission, employing industry-standard cryptographic protocols (e.g., TLS) and secure key management practices. It should implement fine-grained access controls, two-factor authentication, and intrusion detection and prevention systems (IDS/IPS) to safeguard user data.

Data Backup and Recovery: The architecture should perform regular backups of user profiles, game progress, and configuration data, using incremental and differential backups for efficient storage utilization. It must implement backup redundancy across multiple geographically dispersed data centers and employ automated backup integrity verification.

Geographic Distribution: The system should utilize globally distributed data centers strategically placed to reduce network latency, leveraging content delivery networks (CDNs) and edge computing technologies. It should employ geo-routing techniques to direct users to the nearest data center, minimizing network hops.
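As a rough illustration of geo-routing, the sketch below picks the closest data center by great-circle distance. The data center labels and approximate coordinates are placeholders chosen for the example, and real geo-routing is usually based on DNS, anycast, or measured latency rather than pure distance, so treat this as a simplification.

```python
import math

# Illustrative data centers with approximate (latitude, longitude) values.
# These names and coordinates are assumptions made for this example.
DATA_CENTERS = {
    "frankfurt": (50.11, 8.68),
    "newark": (40.74, -74.17),
    "singapore": (1.35, 103.82),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def nearest_data_center(lat: float, lon: float, centers=DATA_CENTERS) -> str:
    """Return the name of the data center closest to the given user location."""
    return min(centers, key=lambda name: haversine_km(lat, lon, *centers[name]))
```

A user near Paris (`nearest_data_center(48.85, 2.35)`) would be sent to the Frankfurt entry in this toy table.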

Load Balancing: The architecture should employ dynamic load balancing algorithms based on factors like server capacity, network latency, and current workload. It should utilize intelligent load balancers that distribute traffic evenly across available servers while considering resource utilization metrics.
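To show what "based on server capacity, network latency, and current workload" could look like, here is a minimal selection sketch. The `Backend` class, the scoring formula, and the `latency_weight` value are all assumptions for illustration; a production load balancer would use its own tuned algorithm.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    capacity: int            # maximum concurrent connections the server can take
    active_connections: int  # current workload
    latency_ms: float        # recently measured network latency


def pick_backend(backends, latency_weight: float = 0.01) -> Backend:
    """Pick the backend with the lowest score (utilization plus a latency penalty)."""
    candidates = [b for b in backends if b.active_connections < b.capacity]
    if not candidates:
        raise RuntimeError("no backend has spare capacity")

    def score(b: Backend) -> float:
        return b.active_connections / b.capacity + latency_weight * b.latency_ms

    return min(candidates, key=score)


pool = [
    Backend("a", capacity=100, active_connections=90, latency_ms=5.0),
    Backend("b", capacity=100, active_connections=20, latency_ms=10.0),
    Backend("c", capacity=50, active_connections=50, latency_ms=1.0),
]
chosen = pick_backend(pool)
```

Here server `c` is skipped because it is full, and `b` wins over `a` because its much lower utilization outweighs its slightly higher latency.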

Elasticity: The system should dynamically scale compute and storage resources based on real-time demand, leveraging auto-scaling policies and cloud provider-specific scaling features. It should employ predictive scaling algorithms based on historical usage patterns and anticipated traffic spikes.
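A simple way to reason about demand-based scaling is a proportional rule: scale the replica count by the ratio of the observed metric to its target. The sketch below assumes that rule, which is similar to the one documented for the Kubernetes Horizontal Pod Autoscaler; the function name, the metric, and the min/max bounds are our own illustrative choices, and it does not cover the predictive part of the requirement.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Proportional scaling: replicas grow or shrink with metric / target."""
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so a metric spike (or an idle period) cannot scale without bound.
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas running at 90% average CPU against a 60% target, `desired_replicas(4, 90.0, 60.0)` asks for 6 replicas; at 30% it would shrink to 2.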

Network Performance: The architecture must ensure low packet loss rates (<0.5%) and maintain a minimum network bandwidth of 100 Gbps for optimal delivery experiences. It should utilize advanced network protocols and traffic optimization techniques (e.g., congestion control, Quality of Service) to minimize latency and packet jitter.

Cross-Platform Compatibility: The system must provide consistent gameplay experiences across various platforms (Windows, macOS, Linux, Xbox, PlayStation, iOS, Android). It should support platform-specific optimizations (e.g., DirectX, Vulkan) and employ adaptive streaming technologies to adjust game quality based on device capabilities.

Content Delivery: The architecture should leverage a globally distributed content delivery network (CDN) with edge caching to accelerate game content delivery. It should utilize HTTP/2 or QUIC protocols for efficient content transmission and employ delta compression and differential updates to minimize bandwidth usage during game updates and patches.

Integration with Third-Party Services: The system should provide well-documented APIs and SDKs for seamless integration with payment gateways, social media platforms, and analytics tools. It should support OAuth 2.0 for secure user authentication and authorization, and employ asynchronous messaging protocols (e.g., AMQP) for reliable inter-service communication.

Compliance and Legal Requirements: The architecture must comply with relevant data protection regulations (e.g., GDPR, CCPA), ensuring user consent management, anonymization of personal data, and secure storage practices.

Monitoring and Analytics: The architecture should include a comprehensive monitoring and analytics framework that collects real-time performance metrics, system logs, and user behavior data. It should leverage centralized logging systems, distributed tracing, and log aggregation tools for efficient monitoring and troubleshooting. Additionally, it should employ machine learning algorithms and anomaly detection techniques to identify potential security threats and performance bottlenecks.

Modularity and Extensibility: The architecture should be designed with a modular and extensible approach, using microservices and service-oriented architecture (SOA) principles. It should allow for the seamless integration of new game titles, features, and external services by leveraging containerization technologies (e.g., Docker) and orchestration platforms (e.g., Kubernetes). The system should facilitate independent deployment and scalability of individual components without impacting the overall system performance or user experience.

Functional requirements

Game Storage and Management: The infrastructure should provide a scalable and efficient storage solution for hosting game files, patches, updates, and downloadable content. It should support large file storage and provide mechanisms for organizing and managing game assets.

Content Distribution: The infrastructure should incorporate a content delivery network (CDN) or a similar mechanism to distribute game files and updates to users globally. It should ensure low-latency and high-bandwidth content delivery for optimal user experience.

File Compression and Optimization: The infrastructure should include tools and processes to compress and optimize game files, reducing their size without compromising quality. This helps minimize bandwidth requirements and improves download and installation times.

Bandwidth Management: The infrastructure should monitor and manage bandwidth usage effectively to ensure fair allocation and prevent congestion during peak usage periods. It should implement traffic shaping or rate limiting mechanisms to optimize network performance.
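One common rate limiting mechanism is the token bucket. The sketch below is a minimal single-threaded version; the class name and parameters are ours, and the injectable `clock` argument exists only to make the behaviour easy to test.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)  # start with a full bucket
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens if available; return False when rate-limited."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill for the time that has passed, never above capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

For download traffic, `cost` could represent the number of bytes (or kilobytes) in a chunk, so that each client is limited by bandwidth rather than by request count. This version is not thread-safe; a shared limiter would need a lock around `allow`.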

Dynamic Scaling: The infrastructure should support dynamic scaling to accommodate fluctuations in user demand for game downloads and updates. It should automatically scale resources, such as storage capacity and network bandwidth, to handle increased traffic and ensure fast and reliable file delivery.

Redundancy and Data Replication: The infrastructure should implement redundancy and data replication mechanisms to ensure high availability and data durability. It should replicate game files across multiple storage nodes or data centers to mitigate the risk of data loss and minimize downtime.

Parallel Processing: The infrastructure should leverage parallel processing techniques to optimize the distribution of large game files. It should split files into smaller chunks and distribute them concurrently to speed up downloads and ensure efficient utilization of network resources.
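The chunking idea can be sketched in a few lines. In this example the "file" is just an in-memory `bytes` object and `fetch` is a stand-in for whatever ranged download we end up using (for example an HTTP range request); both function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_ranges(total_size: int, chunk_size: int):
    """Split [0, total_size) into consecutive (start, end) byte ranges."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        (start, min(start + chunk_size, total_size))
        for start in range(0, total_size, chunk_size)
    ]


def parallel_copy(source: bytes, chunk_size: int, workers: int = 4) -> bytes:
    """Fetch every chunk concurrently and reassemble them in order."""

    def fetch(byte_range):
        start, end = byte_range
        return source[start:end]  # stand-in for a ranged network read

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in input order, so chunks line up again.
        parts = pool.map(fetch, chunk_ranges(len(source), chunk_size))
        return b"".join(parts)
```

`chunk_ranges(10, 4)` gives `[(0, 4), (4, 8), (8, 10)]`, so the last chunk is simply shorter. In a real downloader each chunk would also be verified before reassembly.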

File Integrity Verification: The infrastructure should incorporate mechanisms to verify the integrity of game files during storage and transmission. It should use checksums to detect corruption and cryptographic hashes (e.g., SHA-256) to detect tampering, ensuring users receive error-free game content.
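A minimal sketch of integrity verification with Python's standard `hashlib` follows. It assumes the expected SHA-256 digest is published alongside the file through some trusted channel; the helper names are ours.

```python
import hashlib
import hmac
import io


def sha256_of_stream(stream, block_size: int = 1 << 20) -> str:
    """Hash a binary stream block by block so large files never sit fully in memory."""
    digest = hashlib.sha256()
    for block in iter(lambda: stream.read(block_size), b""):
        digest.update(block)
    return digest.hexdigest()


def verify(stream, expected_hex: str) -> bool:
    """Compare the computed digest with the expected one in constant time."""
    return hmac.compare_digest(sha256_of_stream(stream), expected_hex.lower())


payload = b"game-patch-1.0.1"
expected = hashlib.sha256(payload).hexdigest()
ok = verify(io.BytesIO(payload), expected)
tampered = verify(io.BytesIO(payload + b"x"), expected)
```

Here `ok` is `True` and `tampered` is `False`. For real files we would pass `open(path, "rb")` instead of `io.BytesIO`.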

Metadata Management: The infrastructure should provide a robust metadata management system to store and retrieve information related to game files. It should support efficient indexing and searching of game metadata, enabling users to discover and access relevant game content easily.

Version Control and Rollbacks: The infrastructure should facilitate version control and rollbacks for game files and updates. It should enable users to access previous versions of games and easily revert to a stable version in case of issues with new releases.

Secure File Transfer: The infrastructure should ensure secure transmission of game files and updates over the network. It should utilize encryption protocols (e.g., TLS) to protect data during transit, preventing unauthorized access or tampering.
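On the client side, enforcing encrypted transport can be as small as the sketch below, which uses Python's standard `ssl` module. The choice of TLS 1.2 as the minimum version is our assumption for this example, not a requirement stated above.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Client-side TLS context that verifies certificates and refuses old protocol versions."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = strict_client_context()
```

The resulting context can be passed to standard clients, for example `urllib.request.urlopen(url, context=context)`.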

Geo-replication and Regional Caching: The infrastructure should support geo-replication and regional caching of game files to reduce latency and improve download speeds for users in different geographical locations. It should strategically place storage nodes or caching servers in proximity to users for efficient content delivery.

User Account Storage: The infrastructure should provide secure and scalable storage for user account data, including profiles, preferences, and game libraries. It should ensure fast retrieval of user-specific information to enable personalized experiences across different devices.

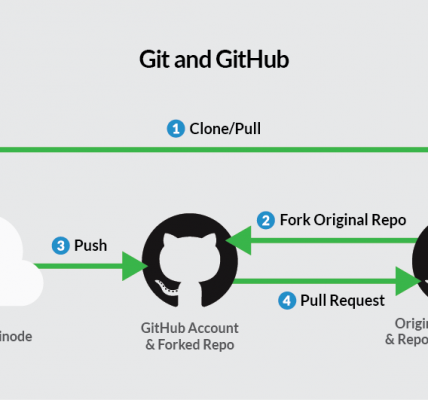

API for Content Management: The infrastructure should offer APIs for seamless integration with game developers and content providers. It should provide functionality to upload, manage, and distribute game files programmatically, allowing for automated content ingestion and updates.

Usage Analytics and Reporting: The infrastructure should include analytics and reporting capabilities to track usage statistics, such as download counts, bandwidth consumption, and user engagement with game content. It should provide insights to improve content delivery strategies and optimize resource allocation.

In the next post, we will finally get to the “meaty” part and start drawing some architecture diagrams and writing some code.

Cheers, Alex.