Building your IT landscape on Akamai Connected Cloud – Part 2 – Defining the project

Related posts

Part 1 – Intro

Part 2 – this post

Welcome to the second part of the “Building your IT landscape on Akamai Connected Cloud” series.

In this post we will define the basic scenario and requirements for our “demo” project which we will build on Akamai Connected Cloud.

SCENARIO

We have a company called “Vapor”, an online-only company that sells games and offers them for download.

After bad experiences with an MSP (managed service provider), they turned to Akamai for help.

Besides aiming for better performance, Vapor’s DevOps team wants to be more hands-on and able to tweak things “under the hood”, so that the entire solution can be rearchitected down the line to fit the new cloud provider.

The project has two major phases. The first, critical phase is a lift and shift of the current platform that improves it with a minimum amount of effort, introduces monitoring and management software, and creates pipelines. The current solution experiences a lot of downtime, which translates directly into lost revenue.

The second phase is to containerize and modernize the entire stack using Akamai’s managed Kubernetes service (LKE) and compute capabilities. Additionally, Vapor plans to establish a presence in the APAC, EMEA and AMER regions in the near future, so that requirement should be taken into consideration when designing the infrastructure.

Current Tech stack

A custom-made application based on PHP and MySQL, with a few thousand articles.

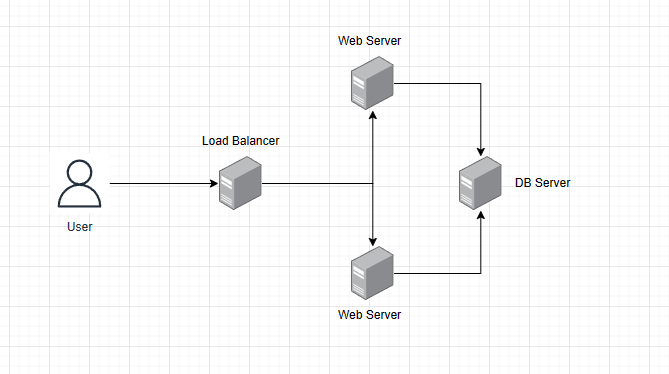

The application runs on eight web servers behind a load balancer. A standalone host runs the MySQL database engine, while game data is stored in object storage, 200 TB in size.

Hardware specifications

- 8 x Web servers: 16 CPU cores, 64 GB RAM, 200 GB SSD

  - Average CPU usage is 70%

  - 80% memory usage

  - 400 IOPS on average

- Database server: 32 CPU cores, 128 GB RAM, 500 GB SSD

  - DB is around 300 GB and growing 10% every quarter

  - 90% RAM usage

  - 5500 IOPS on average, with occasional spikes to 10000 IOPS

- Load balancer

  - 1000 requests/s on average, with occasional spikes to 2500 requests/s

- Object storage

  - Currently hosted with a different cloud provider
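To get a feel for what these numbers mean for sizing, here is a small back-of-the-envelope sketch in Python. It is not part of the project tooling (that will be Terraform and Ansible); it only illustrates the arithmetic. Two things are assumptions on my side: that the 10% quarterly database growth compounds, and that the load balancer spreads requests evenly across the eight web servers. The function names are made up for this example.

```python
# Figures taken from the hardware specification above.
DB_SIZE_GB = 300
DB_DISK_GB = 500
QUARTERLY_GROWTH = 0.10  # assumption: growth compounds every quarter
WEB_SERVERS = 8
AVG_RPS = 1000
PEAK_RPS = 2500


def quarters_until_full(size_gb: float, disk_gb: float, growth: float) -> int:
    """Return the first whole quarter in which the database no longer fits on disk."""
    quarters = 0
    while size_gb <= disk_gb:
        size_gb *= 1 + growth
        quarters += 1
    return quarters


def per_server_rps(total_rps: float, servers: int) -> float:
    """Requests per second per web server, assuming an even spread."""
    return total_rps / servers


if __name__ == "__main__":
    print("Quarters until the DB outgrows its disk:",
          quarters_until_full(DB_SIZE_GB, DB_DISK_GB, QUARTERLY_GROWTH))
    print("Average requests/s per web server:",
          per_server_rps(AVG_RPS, WEB_SERVERS))
    print("Peak requests/s per web server:",
          per_server_rps(PEAK_RPS, WEB_SERVERS))
```

Under those assumptions the database outgrows the current 500 GB disk in the sixth quarter, and in practice sooner, since the sketch ignores the free space needed for logs and temporary files. That is the kind of figure to keep in mind when sizing the new database host.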

Monitoring and management are handled by the current MSP, so we have an open playing field when choosing the technologies we will use for monitoring, access, security, and so on.

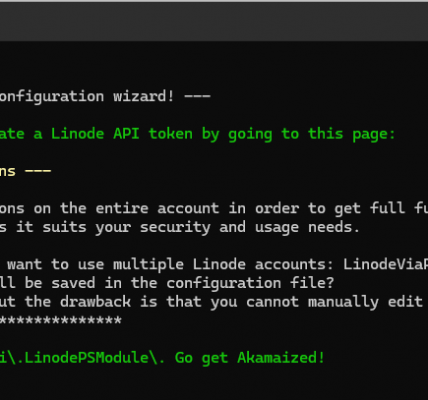

Simplistic overview of the current infrastructure

Where do we want to be in the future?

Before we start writing any code (yes, everything we will do will be done in code), we need to define our project goals, functional and non-functional requirements and try to predict the future a bit 🙂

Let’s start with the main project goals:

- Improve availability

- Lower hosting costs compared to the current provider

- Improve performance

- Build for scale

- Accommodate new modernization efforts which are in the pipeline

- Support a worldwide presence with the ability to do edge computing in the future

- Make it secure

- Make it easy to run

- Make it automated

- Make developers happy <3

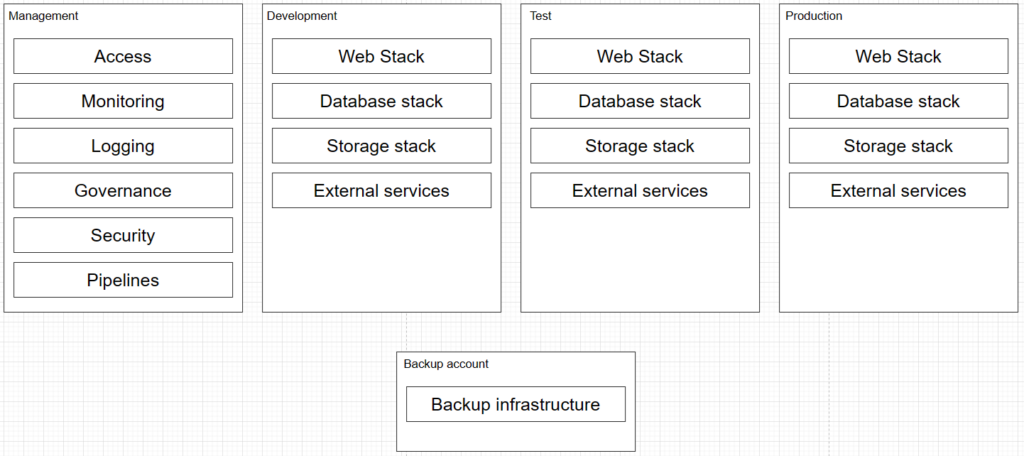

Overview of the new infrastructure layout

As you can see, our infrastructure layout can be broken down into the classic DT(A)P (Development, Test, (Acceptance), Production) approach, alongside a management account and a backup account.

Our management account will run all the “operational” services we need to run our infrastructure, such as monitoring, build and deployment pipelines, security tooling and secure access services, while the Development, Test and Production accounts will host our dev/test and production workloads.

The goal is to make our development, test and production accounts identical in every aspect except scale. That will ensure that all the infrastructure and application tests we run give realistic results.
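The “identical except for scale” idea can be illustrated with a short Python sketch. This is only a way to picture the principle, not how the project will implement it. The `Environment` class, its fields and the `drift` helper are hypothetical, and all sizes are placeholders: the production values simply mirror the current setup, and the test and development values are invented.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class Environment:
    """Hypothetical description of one DTAP environment."""
    name: str
    web_servers: int          # scale: allowed to differ per environment
    db_cpu_cores: int         # scale: allowed to differ per environment
    load_balancer: bool = True
    monitoring: bool = True
    backups: bool = True


# Fields that are allowed to differ between environments.
SCALE_FIELDS = {"name", "web_servers", "db_cpu_cores"}

# Placeholder sizes; test and development are derived from production,
# so everything that is not overridden stays identical.
production = Environment(name="production", web_servers=8, db_cpu_cores=32)
test = replace(production, name="test", web_servers=2, db_cpu_cores=8)
development = replace(production, name="development", web_servers=1, db_cpu_cores=4)


def drift(a: Environment, b: Environment) -> set:
    """Return the non-scale fields whose values differ between two environments."""
    return {
        f.name
        for f in fields(a)
        if f.name not in SCALE_FIELDS and getattr(a, f.name) != getattr(b, f.name)
    }


if __name__ == "__main__":
    # An empty set means the two environments differ only in scale.
    print(drift(production, test))
    print(drift(production, development))
```

Deriving the smaller environments from the production definition, instead of describing each one separately, makes any unintended difference show up as drift. The same thinking carries over to infrastructure code, where one shared definition is parameterized per environment.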

Finally, we will have a dedicated Akamai Connected Cloud account in a different region where we will run our backup software and DR infrastructure.

Additionally, any Akamai Connected Cloud service we might need (such as virtual machines, object storage or LKE clusters) will also be deployed in its own corresponding (DTAP) account.

Our intention is to have all environments physically and logically separated as much as possible.

The entire infrastructure will be built using code, primarily Terraform and Ansible for the first phase. For the second phase, we will look into Kubernetes and application management tooling and pipelines; the specifics are still to be decided.

In the next post we will start to define our functional and non-functional requirements for the entire project, drilling down into each category, and start defining our roadmaps.

Cheers, Alex.