Your own AI coding assistant running on Akamai cloud!

What? You want some AI to write my code?

AI-powered coding assistants have been the main talk of the developer world for a while, there’s no denying that. I can’t count the times I’ve read that AI will replace developers in the next X years. You’ve probably seen tools like GitHub Copilot, ChatGPT, or Tabnine popping up everywhere.

They promise to boost productivity, help with debugging, and even teach you new coding techniques. Sounds amazing, right? But like anything, AI-powered coding assistants have their downsides too. So, let’s talk about what makes them great—and where they might fall short.

Why AI Coding Assistants Are a Game-Changer

Obviously, one of the biggest advantages of using an AI assistant is the time it saves. Instead of writing the same repetitive boilerplate code over and over and over again, you can generate it in seconds. Need a quick function to parse JSON? AI has you covered. Easy peasy! Stuck on how to structure your SQL query? Just ask. This means less time spent on the boring stuff and more time on actual problem-solving.

AI is also a fantastic debugging tool. It can analyze your code, catch potential issues, and suggest fixes before you even run it. Instead of spending hours combing through error messages and Stack Overflow threads, you get quick, relevant suggestions that help you move forward faster.

And let’s not forget about learning. If you’re picking up a new language or framework, an AI assistant can guide you with real-time examples, explain unfamiliar syntax, and even generate sample projects. It’s like having a 24/7 coding mentor who doesn’t judge your questions.

Beyond just speed and learning, AI can actually help improve code quality. It can suggest best practices, help format your code, and even recommend refactoring when your code gets messy. Plus, if you’re working in a team, it can assist with keeping code style consistent and even generate useful commit messages or documentation. Wouldn’t it be cool if we could plug the AI into our pipeline and make sure that all rules are being followed?

The downsides no one (everyone) talks about

As cool as AI coding assistants are, you don’t need to be a genius to see that they’re far from perfect. One of the biggest concerns I personally see is over-reliance. If you’re constantly relying on AI to write your code, do you really understand what’s happening under the hood? This can be a problem when something breaks, and you don’t know how to fix it because you never really wrote the thing in the first place 🙂 I’m sure you love reading someone else’s codebase and debugging that <3

Another issue is that AI-generated code isn’t always optimized or even correct! It might suggest something that works but isn’t efficient, secure, or maintainable. If you blindly accept AI suggestions without reviewing them, you could end up with a mess of inefficient or buggy code.

Then there’s the question of security. AI assistants are trained on huge datasets, and sometimes they can generate code that includes security vulnerabilities. If you’re working on sensitive stuff, you have to be extra careful about what code you’re using and where it’s coming from.

Let’s talk privacy! Many AI coding tools rely on cloud-based processing, meaning your code might be sent to external servers for analysis. If you’re working on proprietary or confidential code, you need to be aware of the risks and check the privacy policies of the tools you’re using.

And finally, while AI can make you more productive, it can also be a bit of a crutch. Some developers might start relying too much on AI for even basic things, which can slow down their growth and problem-solving skills in the long run.

So, Should You Use One?

AI coding assistants are undeniably powerful tools, but they work best when used wisely. They’re great for boosting productivity, helping with debugging, and learning new technologies—but they shouldn’t replace actual coding knowledge and problem-solving skills. Think of them as a really smart assistant, not a replacement for your own expertise.

If you use AI responsibly (review its suggestions, stay mindful of security risks, and make sure you’re still learning and improving as a developer), it can be a fantastic addition to your workflow. Just don’t let it do all the thinking for you 🙂

Still interested and want to start using AI in your daily work? Enter bolt.diy 🙂

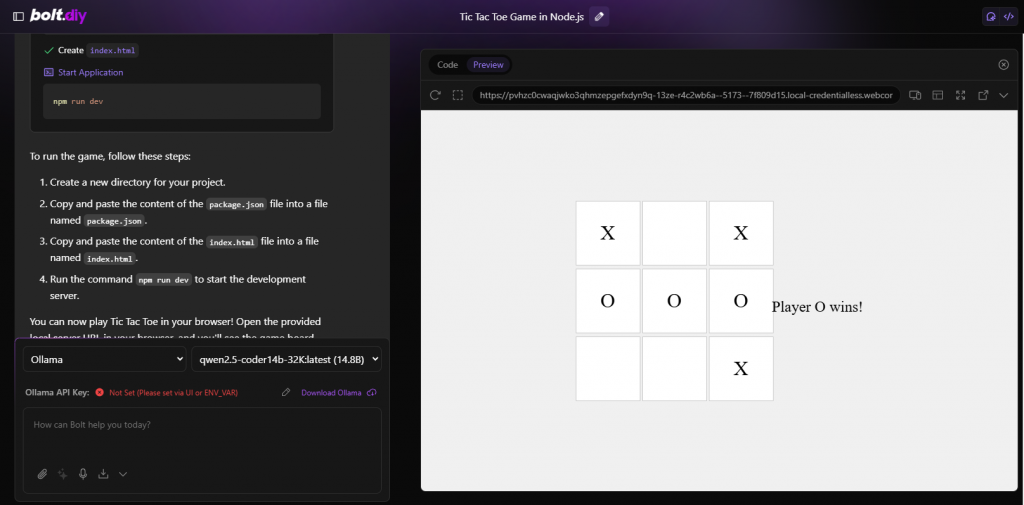

bolt.diy is the open source version of Bolt.new (previously known as oTToDev and bolt.new ANY LLM), which allows you to choose the LLM that you use for each prompt! Currently, you can use OpenAI, Anthropic, Ollama, OpenRouter, Gemini, LMStudio, Mistral, xAI, HuggingFace, DeepSeek, or Groq models.

bolt.diy was originally started by Cole Medin but has quickly grown into a massive community effort to build one of the best open source AI coding assistants out there.

What do I need to get this deployed?

Well, just Terraform and a Linode account.

In the backend we will deploy a VM with a GPU attached, install bolt.diy and Ollama, and ask it to write some code! Maybe a simple Tic-Tac-Toe game? 🙂

Ideally you would run your bolt.diy deployment on a separate machine from the machine running the model, but for our use case, the current deployment model is more than enough.

Like most of the things on this blog, guess what we’re gonna use? Yes! IaC!!! 😀

Here’s a link to the GitHub repository containing the Terraform code: https://github.com/aslepcev/linode-bolt.diy

The code will do the following:

- Deploy a GPU-based instance in Akamai Connected Cloud
- Use cloud-init to install the following:
  - curl
  - wget
  - nodejs
  - npm
  - nvtop, a great tool to monitor your GPU usage
  - NVIDIA drivers
- Deploy and configure a firewall which will allow SSH and bolt.diy access from your IP.
- Configure bolt.diy and Ollama to run as Linux services. For the Ollama service, we always make sure a model is downloaded and created with a 32K context size.
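The 32K context part of that last step can be sketched roughly like this. It is a minimal illustration, not the repository's actual service script: the base model tag `qwen2.5-coder:14b` and the custom model name `coder-32k` are assumptions picked for the example. The script writes an Ollama Modelfile that sets `num_ctx` to 32768 and, only if the `ollama` CLI is present, pulls the base model and creates the derived one.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumed values for illustration; swap in whichever model you prefer.
BASE_MODEL="qwen2.5-coder:14b"
CUSTOM_MODEL="coder-32k"
MODELFILE="$(mktemp -d)/Modelfile"

# A Modelfile that starts from the base model and sets a 32K context window.
cat > "$MODELFILE" <<EOF
FROM ${BASE_MODEL}
PARAMETER num_ctx 32768
EOF

# Only talk to Ollama when the CLI is actually installed.
if command -v ollama >/dev/null 2>&1; then
  ollama pull "$BASE_MODEL"
  ollama create "$CUSTOM_MODEL" -f "$MODELFILE"
else
  echo "ollama not found; Modelfile written to $MODELFILE"
fi
```

Running something like this at service start means the larger-context model gets recreated if it ever goes missing, which is roughly the idea behind "always making sure" the model is there.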

How do you deploy it?

Just fill in your Linode API token, the desired region, and your IP address in the variables.tf file and run the following commands:

git clone https://github.com/aslepcev/linode-bolt.diy

cd linode-bolt.diy

# Fill in the variables.tf file now

terraform init

terraform plan

terraform apply

After a short 5-6 minute wait, everything should be deployed and ready to use. Go ahead and visit the IP address of your VM on port 5173.

Example URL: http://172.233.246.209:5173
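If you would rather not keep refreshing the browser while cloud-init finishes, a small polling helper can do the waiting for you. This is only a sketch: `wait_for_http` is a hypothetical helper name, and it assumes bolt.diy answers a plain GET on its root URL with a successful status once it is up.

```shell
#!/usr/bin/env bash

# Poll a URL until it answers with a successful HTTP status.
# Usage: wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_http() {
  local url="$1" attempts="${2:-30}" delay="${3:-10}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # -f: fail on HTTP errors, -s: quiet, -o /dev/null: discard the body.
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      echo "up after $i attempt(s): $url"
      return 0
    fi
    # Wait before the next attempt, but not after the last one.
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  echo "still not reachable after $attempts attempt(s): $url" >&2
  return 1
}
```

For example, `wait_for_http "http://172.233.246.209:5173" 36 10` would retry every 10 seconds for about 6 minutes, which lines up with the wait mentioned above. Replace the address with your own VM's IP.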

Make sure that Ollama is selected as a provider and you’re off to the races!

What can it do?

Well, it really depends on the model we are running. With the RTX 4000 Ada GPU, we can comfortably run a 14B parameter model with 32K context size which is “ok” for smaller and simpler stuff.
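A quick back-of-the-envelope check shows why a 14B model is a comfortable fit. The sketch below assumes 4-bit quantized weights (an assumption on my side, the exact quantization depends on the model tag you pull) and only estimates the weights themselves. The 32K context cache and runtime overhead come on top and vary by model, so treat the numbers as a lower bound against the 20 GB of VRAM on a single RTX 4000 Ada.

```shell
# Approximate size of the model weights in GB:
# parameters (in billions) x bits per weight / 8 bits per byte.
weights_gb() {
  local params_billion="$1" bits_per_weight="$2"
  echo $(( params_billion * bits_per_weight / 8 ))
}

weights_gb 14 4    # 14B model, 4-bit weights   -> prints 7
weights_gb 14 16   # 14B model, 16-bit weights  -> prints 28
```

So roughly 7 GB of weights at 4 bits leaves headroom for the context, while the same model at 16 bits would not fit on one card at all.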

I tested it out with a simple task of creating a Tic-Tac-Toe game in NodeJS. It got the functionality right the first time, but it looked like something only a mother could love 🙂

I just told it to make it a bit prettier and add some color; these were the results I got:

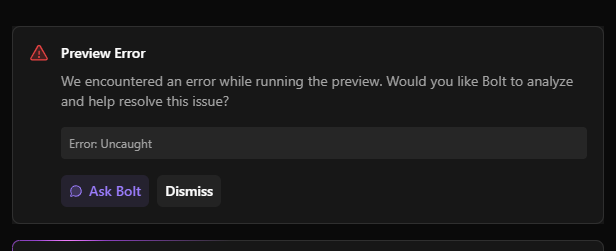

Interestingly, during the coding process, it made a mistake which it managed to identify and fix all on its own! All I did was press the “Ask Bolt” button.

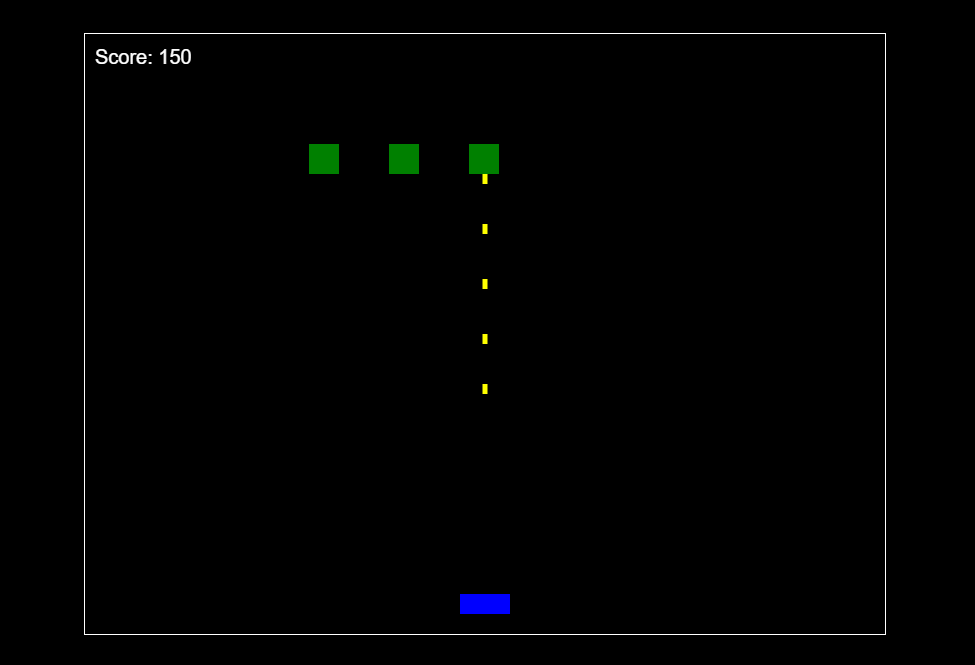

Also, here’s a fully functioning Space Invaders-like game, which it also wrote.

What if I want to run a larger model? 32B parameters or even larger?

That’s very easy! Since Ollama can use multiple GPUs, all we need to do is scale up the VM we are using to one which includes two or more GPUs. Akamai offers a maximum of 4 GPUs per VM, which gives us up to 80 GB of VRAM to run our model. I will not experiment with larger models in this blog post; this is something we will benchmark and try out in the future.
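After resizing, it is worth confirming how much VRAM the VM really sees before pulling a bigger model. A minimal sketch, assuming the NVIDIA drivers are installed so that `nvidia-smi` is on the PATH; `sum_mib` is just a hypothetical helper name for adding up the per-GPU values.

```shell
# Sum a list of per-GPU memory sizes (MiB, one per line) read from stdin.
sum_mib() {
  awk '{ total += $1 } END { print total + 0 }'
}

# On the VM itself: ask the driver for each GPU's total memory and add it up.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | sum_mib
fi
```

The printed number is the combined memory in MiB across all GPUs, which you can compare against the size of the model you plan to run.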

Cheers! Alex.

P.S – parts of this post were written by bolt.diy 🙂