DeepSeek-R1 on Akamai Connected Cloud

Before we dive into installation and real usage, let’s talk about DeepSeek-R1 a bit and why it’s a big thing.

The team behind DeepSeek-R1-Zero took a cool approach to advancing large language models by skipping supervised fine-tuning (SFT) entirely and jumping straight into reinforcement learning (RL).

This resulted in a model capable of self-verification, reflection, and generating intricate chain-of-thoughts (CoTs) to solve complex problems. What’s borderline groundbreaking here is that this is the first open research to show RL alone can incentivize reasoning in LLMs, without the need for SFT. Development pipeline for DeepSeek-R1 includes two RL stages to improve reasoning patterns and align with human preferences, along with two SFT stages to seed the model’s reasoning and non-reasoning capabilities.

They didn’t stop at advancing reasoning in large models—they also focused on making smaller models more powerful! By distilling the reasoning patterns of larger models into smaller ones, they achieved better performance than what RL alone could achieve on small models.

Using DeepSeek-R1’s reasoning data, they fine-tuned several dense models and demonstrated exceptional benchmark results. To further benefit the community, they’ve open-sourced distilled checkpoints ranging from 1.5B to 70B parameters, based on the Qwen2.5 and Llama3 series.

What is distillation you may ask?

In the context of AI, distillation (short for knowledge distillation) is a technique used to transfer knowledge from a larger, more complex model (called the teacher) to a smaller, simpler model (called the student). The goal is to make the smaller model perform as well as—or almost as well as—the larger model, while requiring significantly fewer resources (e.g., memory, computation).

How Distillation Works:

- Training the Teacher Model:

A large model is trained first. This model is usually highly expressive, capable of capturing complex patterns in the data, but it may be too computationally intensive for practical deployment. - Soft Labels as Knowledge:

Instead of just training the student model on the original dataset, the student learns from the soft predictions of the teacher model. These predictions include probability distributions over all possible outcomes (rather than binary correct/incorrect labels), which contain richer information about the relationships between classes. - Student Model Training:

The student model is trained to mimic the teacher’s behavior using a combination of:- The soft predictions from the teacher model (knowledge transfer).

- The original ground-truth data.

Why Distillation is Useful:

- Efficiency: Smaller models are faster, require less memory, and can run on devices with limited computational power (like mobile phones or edge devices).

- Scalability: By distilling large models into smaller ones, researchers and developers can deploy AI more broadly while maintaining strong performance.

- Accessibility: Distilled models often serve as the foundation for open-source projects, making advanced AI capabilities more accessible to the community.

Ok, let’s get to the interesting part and deploy our model. We can break down the entire deployment in 4 easy steps.

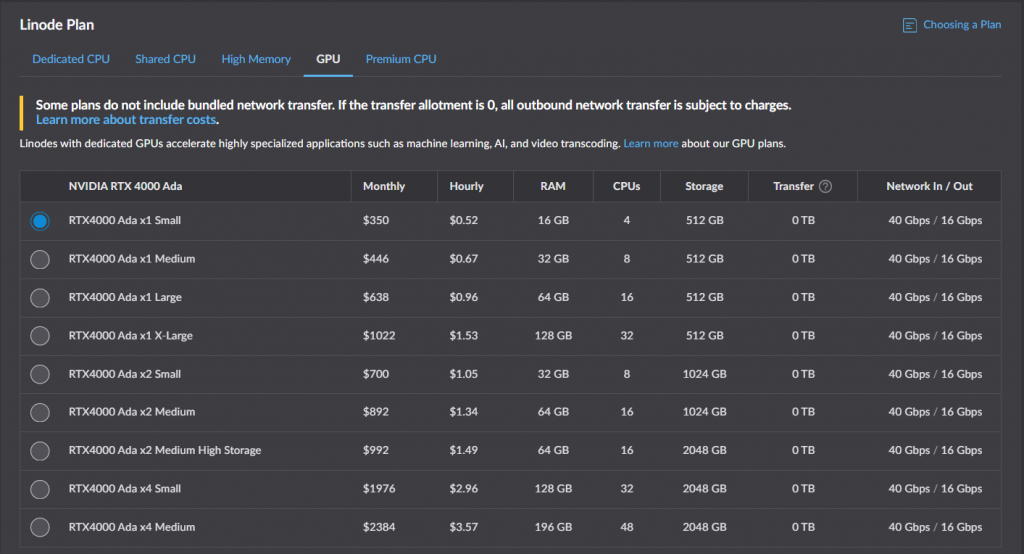

Step 1 – Deploy a GPU VM in Linode. I’m gonna deploy the smallest GPU VM available “RTX4000 Ada x1 Small” which costs 350$ per month.

Step 2 – Connect using SSH to your instance and install Nvidia drivers

Since I’m using Debian 12 as my OS, installing Nvidia drivers was quite easy following official instructions – https://wiki.debian.org/NvidiaGraphicsDrivers

After the driver has been install, I suggest you also install “nvtop” using:

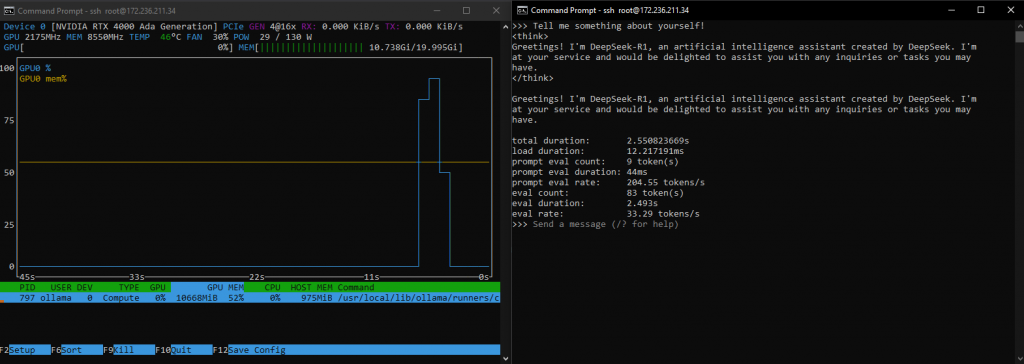

apt install nvtopnvtop is a cool utility which can monitor the usage of your GPU. Think of it like a “top” command, but for GPU’s.

Step 3 – Install Ollama

Installing Ollama is extremely simple, all you need to do it run a single command.

curl -fsSL https://ollama.com/install.sh | shStep 4 – Download and run the DeepSeek-R1 model into Ollama. Since the GPU I will be using has 20 GB of VRAM, we can fit a 14B model without any issues.

–verbose flag tells Ollama to display performance metrics after the generation has been completed.

ollama run deepseek-r1:14b --verbose

Let’s ask a simple question and see how it performs: What is distilling in the context of AI?

<think>

Hmm, distillation… I think it’s related to machine learning, specifically in training

models. Maybe it’s about transferring knowledge from one model to another? Let me recall.

Oh right, model distillation is the process where you take a complex, large model and

create a smaller, more efficient version that retains the same performance.Wait, there are different types of distillation too. I should mention those. There’s

knowledge distillation where you transfer knowledge from a teacher model to a student

model. Then there’s parameter-efficient methods like fine-tuning or adapter-based

approaches. Also, pruning and quantization techniques come under distilling in terms of

optimizing models.I need to make sure the explanation is clear but not too technical. The user might be

someone new to AI or looking to understand optimization techniques. They probably want a

practical understanding without getting bogged down by jargon.Maybe I should start with the general idea, then break it down into types, and explain

each briefly. Also, highlighting why it’s important—like efficiency, scalability,

deployment on devices—would be helpful for them to grasp its value.I should keep the response structured but conversational, avoiding any markdown. Just

plain, easy-to-understand English. Let me put that together.

</think>In the context of AI and machine learning, distilling refers to a technique where

knowledge or information from one model is transferred to another, often simpler or

smaller model. This process aims to create a more efficient version of the original model

while preserving its performance.Key Ideas:

- Model Distillation:

- It involves training a “student” model to mimic the behavior or decisions of a

larger, more complex “teacher” model.- The teacher model is typically pre-trained on a large dataset and has high accuracy

but may be computationally expensive or difficult to deploy.

- Types of Distillation:

- Knowledge Distillation: The teacher model’s predictions (soft labels) are used to

train the student model, which can lead to better generalization compared to using only

hard labels.- Parameter-Efficient Methods: Techniques like fine-tuning, adapter-based

approaches, or prompt tuning allow the transfer of knowledge without copying all

parameters from the teacher model.- Pruning and Quantization: These are techniques used to reduce the size of a model

by removing unnecessary weights (pruning) or reducing the precision of the weights

(quantization).

- Applications:

- Distillation is widely used to deploy AI models on edge devices where computational

resources are limited.- It also helps in scaling down large models for faster inference while maintaining

performance.In summary, distilling in AI is about making complex models more efficient and accessible

by transferring their knowledge to simpler or smaller models.

total duration: 19.004002094s

load duration: 12.267119ms

prompt eval count: 105 token(s)

prompt eval duration: 48ms

prompt eval rate: 2187.50 tokens/s

eval count: 615 token(s)

eval duration: 18.938s

eval rate: 32.47 tokens/sOne major difference between DeepSeek-R1 and majority of other generative AI models is that DeepSeek will show you the “train of thought” it used to get to the answer, not just the answer itself.

Once we have the model running locally, we can turn it into our own personal AI assistant by using Open-WebUI or turn it into your own coding assistant using Bolt.diy.

Ollama also has it’s on API which you can use to embed the model into your own applications.

Possibilities are endless!

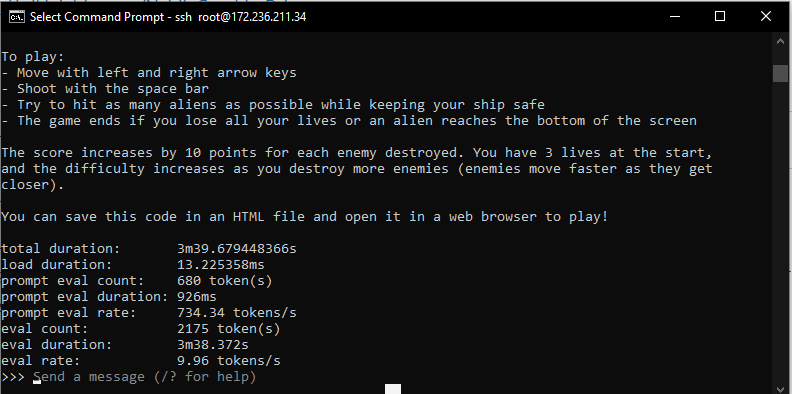

BTW, we can even run a 32B parameter model, but the performance drops to 10 tokens per second and spills a bit into the RAM and CPU, but nothing drastic. Model is still fully usable and comparable to ChatGPT on a busy day.

Results you can see on the image are based on the request to build me “Space Invaders” game in HTML.

Until next time where we will take a look into bolt.diy and deploy our own coding assistant!

Alex!

Very cool, thanks for sharing! What is your estimated costs, monthly, if you were to leave this running full time?

Hello Dennis, this specific configuration would cost you 350$ per month on Akamai, running 24/7